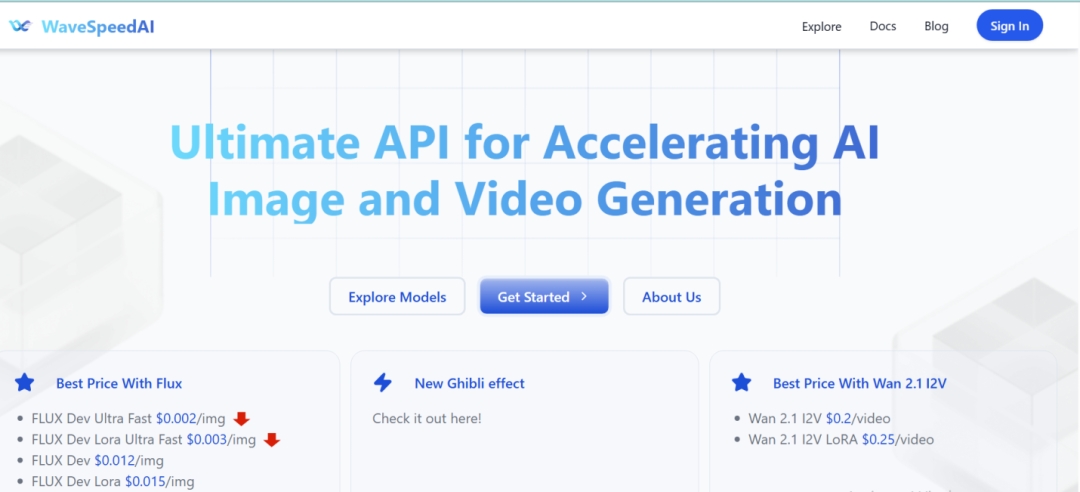

By optimizing FLUX-dev—a state-of-the-art text-to-image and image-to-image model—on DataCrunch’s NVIDIA B200 infrastructure, WaveSpeedAI’s proprietary inference framework delivers ultra-low latency and cost-efficiency. Enterprises can now generate high-quality images at unprecedented speeds, slashing API response times and reducing costs by up to 60% per image.

“This milestone isn’t just about speed—it’s about redefining what’s possible for generative AI at scale,” said Zeyi Cheng, founder and CEO of WaveSpeedAI. “By co-designing software with Blackwell’s architecture, we’ve turned computational challenges into opportunities for creativity and accessibility.”

FLUX-dev, renowned for its superior prompt adherence and scene composition, leverages a 12B-parameter hybrid architecture of diffusion transformers. However, its computational demands historically hindered real-time deployment. WaveSpeedAI’s framework, optimized for NVIDIA’s B200 GPUs, addresses these challenges through:

- Custom CUDA kernels and kernel fusion to eliminate CPU bottlenecks.

- BF16/FP8 mixed precision via Blackwell’s 5th-gen tensor cores, balancing speed and quality.

- Memory optimization leveraging the B200’s 180GB HBM3E and 7.7TB/s bandwidth.

- Latency-first scheduling to prioritize user experience over batch processing.
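
As a rough illustration of why precision and memory bandwidth matter together, the figures quoted above (12B parameters, 180GB of HBM3E, 7.7TB/s) can be turned into a back-of-envelope estimate of how long it takes to stream the model weights from memory once. This is a simplified sketch, not WaveSpeedAI's method: it assumes peak bandwidth, counts only the transformer weights (no activations, text encoders, or VAE), and treats 1GB as 10^9 bytes. The function names are hypothetical.

```python
# Back-of-envelope estimate: time to stream the model weights once from HBM.
# Figures are taken from the text; everything else is a simplifying assumption.

PARAMS = 12e9            # 12B parameters
HBM_BANDWIDTH = 7.7e12   # 7.7 TB/s, in bytes per second (peak, assumed achievable)
HBM_CAPACITY = 180e9     # 180 GB, in bytes

BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weight_footprint_bytes(precision: str) -> float:
    """Memory needed to hold the weights at the given precision."""
    return PARAMS * BYTES_PER_PARAM[precision]


def weight_read_time_ms(precision: str) -> float:
    """Lower bound on the time to read every weight once, in milliseconds."""
    return weight_footprint_bytes(precision) / HBM_BANDWIDTH * 1e3


for precision in BYTES_PER_PARAM:
    size = weight_footprint_bytes(precision)
    print(
        f"{precision}: {size / 1e9:.0f} GB of weights "
        f"({size / HBM_CAPACITY:.1%} of HBM), "
        f"{weight_read_time_ms(precision):.2f} ms per full read"
    )
```

Under these assumptions, halving the bytes per parameter halves both the weight footprint and the minimum time spent reading weights per denoising step, which is one reason FP8 is attractive for latency-sensitive inference.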

The B200’s innovations, including Tensor Memory (TMEM) and CTA pairs, enable WaveSpeedAI to maximize GPU utilization. The GPU achieves 2.25 PFLOPS of BF16 performance, double that of the H100.

Benchmarks reveal the WaveSpeedAI-DataCrunch solution reduces latency to under 1 second per image (vs. 6 seconds on H100 baselines), enabling use cases like live design collaboration, dynamic ad generation, and interactive media. Cost per image plummets as efficiency rises, making large-scale deployment economically viable.
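
The relationship between latency and cost per image can be sketched with simple arithmetic. The example below assumes one image is generated at a time on a single GPU billed by the hour, uses 1 second as an upper bound for the "under 1 second" figure, and takes the "up to 60%" cost reduction quoted earlier as the target. Hourly prices are not given in this release, so the sketch works only with the ratio between them. The function names are hypothetical.

```python
# Latency-to-cost arithmetic under a simple model:
# one image at a time, one GPU, billed per hour.

SECONDS_PER_HOUR = 3600


def images_per_hour(latency_s: float) -> float:
    """Images one GPU can produce per hour at the given per-image latency."""
    return SECONDS_PER_HOUR / latency_s


def cost_per_image(hourly_price: float, latency_s: float) -> float:
    """Per-image cost for a GPU billed at hourly_price (any currency)."""
    return hourly_price * latency_s / SECONDS_PER_HOUR


def breakeven_price_ratio(
    baseline_latency_s: float, new_latency_s: float, target_cost_reduction: float
) -> float:
    """Highest new/baseline hourly price ratio that still meets the target
    per-image cost reduction."""
    speedup = baseline_latency_s / new_latency_s
    return (1 - target_cost_reduction) * speedup


h100_latency_s = 6.0  # H100 baseline from the text
b200_latency_s = 1.0  # upper bound for "under 1 second"

print(f"H100: {images_per_hour(h100_latency_s):.0f} images/hour")
print(f"B200: at least {images_per_hour(b200_latency_s):.0f} images/hour")
ratio = breakeven_price_ratio(h100_latency_s, b200_latency_s, 0.60)
print(f"Break-even hourly price ratio: about {ratio:.1f}x")
```

In this model a sixfold latency reduction leaves room for the faster GPU to cost up to roughly 2.4 times as much per hour while still cutting the per-image cost by 60%.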

“DataCrunch’s mission is to democratize access to elite hardware,” said a DataCrunch spokesperson. “Partnering with WaveSpeedAI ensures enterprises harness the B200’s full potential today, with GB200 clusters on the horizon.”

WaveSpeedAI plans to extend its framework to video generation, targeting near-real-time inference for gaming, film, and virtual applications. The collaboration will also explore NVIDIA’s upcoming GB200 NVL72 clusters, promising further leaps in performance.

About WaveSpeedAI:

Founded by AI pioneer Zeyi Cheng, WaveSpeedAI specializes in accelerating generative AI inference. Its proprietary engine powers applications demanding speed, quality, and scalability, from creative tools to enterprise solutions.

About DataCrunch:

DataCrunch delivers high-performance GPU cloud solutions, empowering innovators with instant access to the latest hardware, including NVIDIA’s Blackwell architecture.

Media Contact

Company Name: Wavespeed

Contact Person: Chengzeyi

Country: Singapore

Website: https://wavespeed.ai/